Dear everybody re ChatGPT etc,

The word you need that you don't know you need is CONFABULATION.

What y'all are calling "hallucination" is, in neurology and psychology (where it means two slightly different things), called "confabulation".

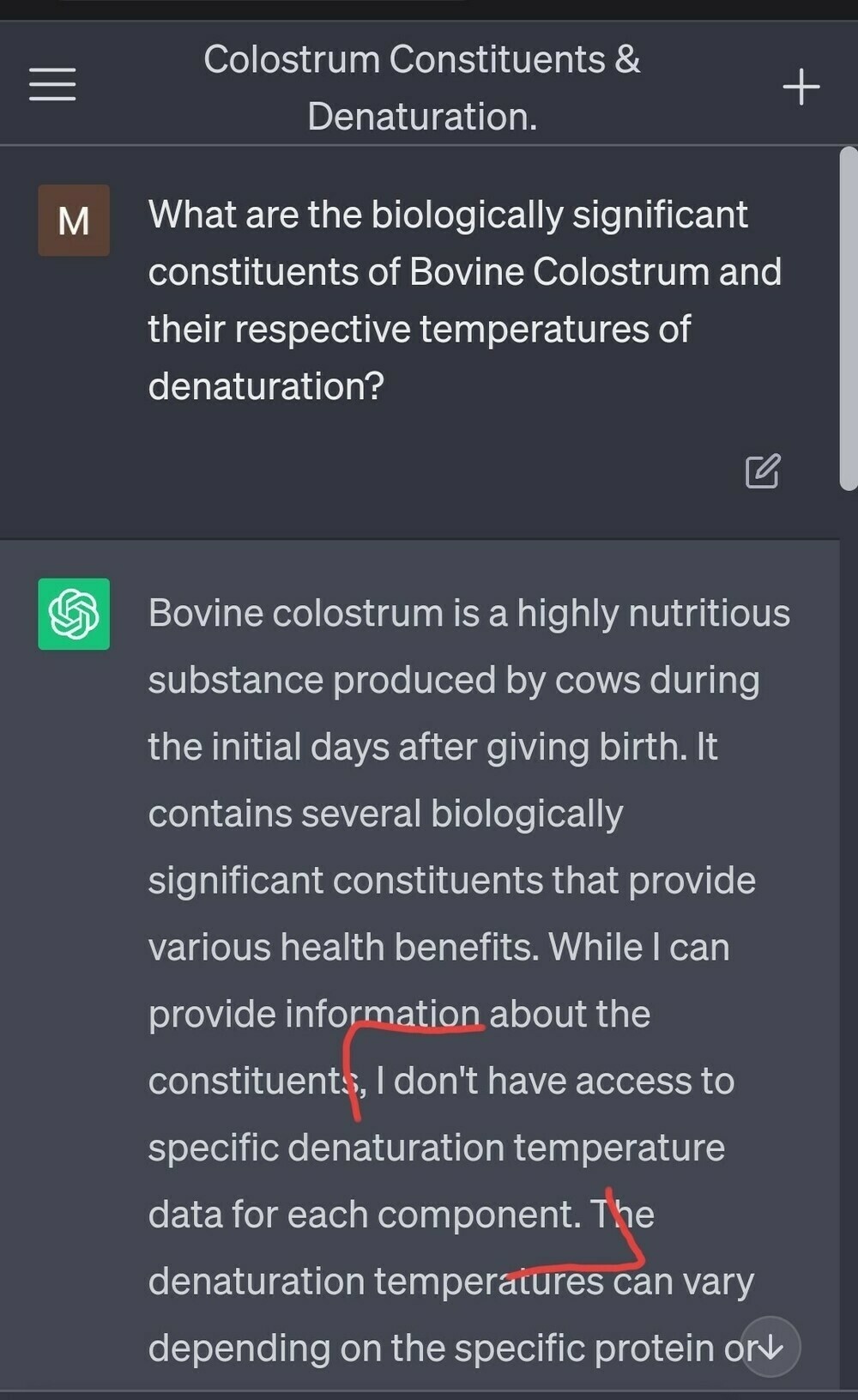

It means when somebody's just making something up and has no idea that they're making things up, because their brain/mind is glitching.

A lot of folks are trying both to understand the AI chatbots and to grapple with the possible implications for how organic minds work, by speculating about human cognition. Y'all should definitely check into the history of actual research into this topic; it will make your socks roll up and down, and blow your minds. And one of the key areas will be surfaced with that keyword.

There have been a bunch of very clever experiments done on humans and how they explain themselves, which betray that there are parts of the mind that are surprisingly - and even alarmingly - independent.

Frex...